GET Kibana-mother or Why do you need logs at all? / UKit Group Blog / Sudo Null IT News FREE

You can say that "sometimes it is necessary..." But in fact, you always want to see what is in your logs through a graphical interface. This allows you to:

- Make life easier for developers and system administrators, whose time is simply too valuable and expensive to spend writing grep pipelines and parsers for each individual case.

- Give access to the information contained in the logs to moderately advanced users: managers and technical support.

- See the dynamics and trends in the occurrence of logged events (for example, errors).

So today let's talk once more about the ELK stack (Elasticsearch + Logstash + Kibana).

But this time, with JSON logs!

Such a use case promises to fill your life with completely new colors and make you experience the full gamut of feelings.

Prologue:

"Understand, on Habrahabr all they talk about is Kibana and Elasticsearch. About how damn cool it is to watch a huge text log turn into beautiful graphs, while a barely visible CPU load burns somewhere deep in top. And you? What will you tell them?"

Equipment

In the life of every normal guy, a moment arises when he decides to put an ELK stack on his project.

The average pipeline layout looks something like this:

We send 2 types of logs to Kibana:

- An "almost ordinary" nginx log. Its only highlight is the request-id. We will generate these, as is now fashionable, using Lua-in-config™.

- "Unusual" logs of the application itself on node.js. That is, the error log and the "prodlog", where "normal" events go, for example, "the user created a new site".

The peculiarity of this type of log is that it is:

- Serialized JSON (events are logged by the bunyan npm module).

- Shapeless. The set of fields differs from message to message; in addition, the same field can hold different data types in different messages (this is important!).

- Really fat. Some messages are longer than 3 MB.

These, respectively, are the front end and back end of our entire system... Let's get started!

filebeat

filebeat.yml:

We describe the paths to the files and add to the messages the fields that we will need to determine the log type at the filtering stage and when sending to ES.

    filebeat:
      prospectors:
        -
          paths:
            - /home/appuser/app/production.log.json
          input_type: log
          document_type: production
          fields:
            format: json
            es_index_name: production
            es_document_type: production.log
        -
          paths:
            - /home/appuser/app/error.log.json
          input_type: log
          document_type: production
          fields:
            format: json
            es_index_name: production
            es_document_type: production.log
        -
          paths:
            - /home/appuser/app/log/nginx.log
          input_type: log
          document_type: nginx
          fields:
            format: nginx
            es_index_name: nginx
            es_document_type: nginx.log
      registry_file: /var/lib/filebeat/registry

    output:
      logstash:
        hosts: ["kibana-server:5044"]

    shipper:
      name: ukit
      tags: ["prod"]

    logging:
      files:
        rotateeverybytes: 10485760 # = 10MB

logstash

logstash-listener / 01-beats-input.conf:

    input {
      beats {
        port => 5044
      }
    }

logstash-listener / 30-rabbit-output.conf:

    output {
      rabbitmq {
        exchange => "logstash-rabbitmq"
        exchange_type => "direct"
        key => "logstash-key"
        host => "localhost"
        port => 5672
        workers => 4
        durable => true
        persistent => true
      }
    }

logstash-indexer / 01-rabbit-input.conf:

    input {
      rabbitmq {
        host => "localhost"
        queue => "logstash-queue"
        durable => true
        key => "logstash-key"
        exchange => "logstash-rabbitmq"
        threads => 4
        prefetch_count => 50
        port => 5672
      }
    }

logstash-indexer / 09-filter.conf:

Depending on the format, we run the message through the appropriate codec.

In the case of nginx, these are the "unusual" ready-made regular expressions offered by the "grok" filter module (on the left) and the names of the fields into which the captured data will fall (on the right). For greater beauty, we also have a geoip filter that determines the client's location. In Kibana it will be possible to build a "geography" of customers. Download the database from here: dev.maxmind.com/geoip/legacy/geolite.

And in the case of JSON, as you can see, you don't need to do anything at all, which is good news.

    filter {
      if [fields][format] == "nginx" {
        grok {
          match => [
            "message", "%{IPORHOST:clientip} - \[%{HTTPDATE:timestamp}\] %{IPORHOST:domain} \"(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})\" %{NUMBER:status:int} (?:%{NUMBER:bytes:int}|-) %{QS:referrer} %{QS:agent} %{NUMBER:request_time:float} (?:%{NUMBER:upstream_time:float}|-)( %{UUID:request_id})?"
          ]
        }
        date {
          locale => "en"
          match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]
        }
        geoip {
          source => "clientip"
          database => "/opt/logstash/geoip/GeoLiteCity.dat"
        }
      }
      if [fields][format] == "json" {
        json {
          source => "message"
        }
      }
    }

logstash-indexer / 30-elasticsearch-output.conf:

We take the index name and document_type for ES from the fields that were attached to the messages in filebeat at the very beginning of the path.

    output {
      elasticsearch {
        hosts => ["localhost:9200"]
        index => "logstash-%{[fields][es_index_name]}-%{+YYYY.MM.dd}"
        document_type => "%{[fields][es_document_type]}"
      }
    }

Binding events in front and back logs

Setting:

"We had 2 types of logs, 5 services in the stack, half a terabyte of data in ES, and an uncountable set of fields in the application logs. Not that all of this was necessary for real-time analysis of the state of the service, but once you start thinking about binding nginx events to application events, it becomes difficult to stop. The only thing that worried me was Lua. There is nothing more helpless, irresponsible and vicious than Lua in Nginx configs. I knew that sooner or later we would get into this rubbish."

To generate the request-id in Nginx, we will use a Lua library that generates UUIDs. It generally copes with its task, but I had to rework it a bit with a file, because in its original form it (ta-dam!) duplicates UUIDs.

    http {
        ...
        # Library for generating request identifiers
        lua_package_path '/etc/nginx/lua/uuid4.lua';
        init_worker_by_lua '
            uuid4 = require "uuid4"
            math = require "math"
        ';
        ...
        # Declare the request_uuid variable;
        # the usual way (set $var) does not work in the http context
        map $host $request_uuid { default ''; }

        # Describe the log format
        log_format ukit '$remote_addr - [$time_local] $host "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" $request_time '
                        '$upstream_response_time $request_uuid';

        # Path to the file and the log format
        access_log /home/appuser/app/log/nginx.log ukit;
    }

    server {
        ...
        # Generate the request id
        set_by_lua $request_uuid '
            if ngx.var.http_x_request_id == nil then
                return uuid4.getUUID()
            else
                return ngx.var.http_x_request_id
            end
        ';

        # Send it to the backend as a header so that the backend logs it too
        location @backend {
            proxy_pass http://127.0.0.1:$app_port;
            proxy_redirect http://127.0.0.1:$app_port http://$host;
            proxy_http_version 1.1;
            proxy_set_header Connection "";
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Nginx-Request-ID $request_uuid; # request id
            proxy_set_header Host $host;
            ...
        }
        ...
    }

As a result, given an event in the application log, we can find the request that triggered it as it arrived at Nginx. And vice versa.

It quickly became apparent that our "universally unique" identifiers are not so unique. The fact is that the library takes its randomseed from the timestamp at the moment the nginx worker starts. And we have as many workers as there are CPU cores, and they all start at the same time... No matter! Mix the worker's pid in there and we will be happy:

    ...
    local M = {}
    local pid = ngx.worker.pid()
    -----
    math.randomseed( pid + os.time() )
    math.random()
    ...

P.S. There is a ready-made nginx-extras package in the Debian repository. It comes with Lua and a bunch of useful modules right away. I recommend it instead of compiling the Lua module by hand (there is also openresty, but I have not tried it).
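The seed collision described above is easy to reproduce outside nginx. The sketch below is not the uuid4 library: `makeRandom` is a small stand-in generator, and the pids and start time are made-up values. It only illustrates that two workers seeded with the same timestamp produce the same first id, while adding the pid makes the seeds differ.

```javascript
// Tiny deterministic generator standing in for Lua's math.random
// (a linear congruential generator, not the real algorithm).
function makeRandom(seed) {
  let state = seed >>> 0;
  return function next() {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state;
  };
}

// Build an 8-hex-digit id from the first number a freshly seeded generator yields.
function firstId(seed) {
  return makeRandom(seed)().toString(16).padStart(8, "0");
}

const startTime = 1460000000; // hypothetical: both workers start in the same second
const pids = [4101, 4102];    // hypothetical worker pids

const timeOnly = pids.map(() => firstId(startTime));
const withPid = pids.map((pid) => firstId(pid + startTime));

console.log(timeOnly[0] === timeOnly[1]); // true: identical seeds give identical ids
console.log(withPid[0] === withPid[1]);   // false: the pid makes the seeds differ
```

Note that a plain sum can still coincide for different pid and time pairs (for example, a lower pid one second later), so this reduces collisions rather than ruling them out.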

Grouping errors by frequency of occurrence

Kibana allows you to group events (build rankings of them) based on identical field values.

We have stack traces in the error log, and they are almost ideally suited as a grouping key. The catch is that Kibana cannot group by keys longer than 256 characters, and stacks are certainly longer. Therefore, we compute md5 hashes of the stack traces in bunyan and group by those instead. Beauty!

This is what the top 20 errors look like:

And a single type of error on the chart, with a list of occurrences:

Now we know which bug in the system can be fixed sometime later, because it is too rare. You must admit that this approach is much more scientific than "there have been a lot of similar support tickets this week".
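A minimal sketch of the hashing step, using Node's built-in `crypto` module. The helper names and the `stack_hash` field are assumptions for illustration; the article does not show how the hash is wired into bunyan.

```javascript
const crypto = require("crypto");

// Hypothetical helper: derive a short, fixed-length grouping key from a stack trace.
function stackHash(stack) {
  return crypto.createHash("md5").update(String(stack), "utf8").digest("hex");
}

// Hypothetical helper: attach the hash to an error record before it is logged.
function withStackHash(record) {
  if (!record.err || !record.err.stack) return record;
  return { ...record, stack_hash: stackHash(record.err.stack) };
}

const err = new Error("boom");
const record = withStackHash({
  msg: "request failed",
  err: { message: err.message, stack: err.stack },
});
console.log(record.stack_hash.length); // 32: an md5 hex digest always has 32 characters
```

The digest is 32 characters regardless of stack length, which keeps it well under the 256-character limit. Stacks that embed varying data (ids, timestamps) would hash differently, so the grouping is only as stable as the stack text itself.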

And now, the twist: it works, only badly

Climax:

"I understand. You found paradise in NoSQL: you were developing quickly, because you kept everything in MongoDB, and you didn't need friends like me. And now you come and say: I need search. But you don't ask with respect, you don't even call me the Best Search Engine. No, you come to my house on Lucene's birthday and ask me to index unstructured logs for free."

Surprises

Do all messages from the log get into Kibana?

No. Not all of them get in.

The mapping remembers the name of each field and its type (number, string, array, object, etc.). If we send ES a message containing a field that already exists in the mapping, but whose type does not match what was in this field before (for example, in the mapping it is an array, but an object arrived), then such a message will not get into ES, and a not too obvious message will appear in its log:

{"error": "MapperParsingException [object mapping for [something] tried to parse as object, but got EOF, has a concrete value been provided to it?]"

Source
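The "first type wins" behaviour described above can be modelled in a few lines. This is a toy model for checking logs before they are shipped, not Elasticsearch's actual mapping rules, and `makeTypeTracker` is a hypothetical helper that only looks at top-level fields.

```javascript
// Classify a JSON value using the type names from the text: number, string, array, object...
function kindOf(value) {
  if (value === null) return "null";
  if (Array.isArray(value)) return "array";
  return typeof value; // "number", "string", "boolean", "object"
}

// Remember the kind first seen for each top-level field and report later mismatches.
function makeTypeTracker() {
  const seen = new Map();
  return function check(doc) {
    const conflicts = [];
    for (const [field, value] of Object.entries(doc)) {
      const kind = kindOf(value);
      if (kind === "null") continue; // nulls carry no type information
      if (!seen.has(field)) {
        seen.set(field, kind);
      } else if (seen.get(field) !== kind) {
        conflicts.push({ field, expected: seen.get(field), got: kind });
      }
    }
    return conflicts;
  };
}

const check = makeTypeTracker();
console.log(check({ user: "alice", sites: [1, 2] }).length);  // 0: first sighting defines the types
console.log(check({ user: "bob", sites: { id: 3 } }).length); // 1: "sites" was an array, now an object
```

Running something like this over a sample of application logs would show in advance which fields are likely to be rejected.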

Field names in json logs

Elasticsearch v2.x does not accept messages that contain fields whose names contain periods. There was no such restriction in v1.x, and we cannot upgrade to the new version without redoing all the logs, since such fields have already "historically developed" in our logs.

Source

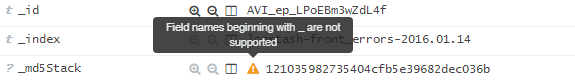

In addition, Kibana does not support fields whose names begin with an underscore '_'.

Developer Comment
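One way to cope with both naming restrictions is to rename keys before the event is logged. The sketch below is an assumption about how that could look, not what the authors did; `sanitizeKeys` is a hypothetical helper and expects plain JSON-like data.

```javascript
// Recursively rename keys: dots become underscores, leading underscores are dropped.
function sanitizeKeys(value) {
  if (Array.isArray(value)) return value.map(sanitizeKeys);
  if (value === null || typeof value !== "object") return value;
  const out = {};
  for (const [key, inner] of Object.entries(value)) {
    // Fall back to a placeholder name if nothing is left after stripping.
    const safe = key.replace(/\./g, "_").replace(/^_+/, "") || "field";
    out[safe] = sanitizeKeys(inner);
  }
  return out;
}

const cleaned = sanitizeKeys({ "user.id": 7, _internal: { "a.b": [{ "c.d": 1 }] } });
console.log(JSON.stringify(cleaned));
// → {"user_id":7,"internal":{"a_b":[{"c_d":1}]}}
```

Renaming can make two different keys collide (for example `a.b` and `a_b`), in which case the later one silently overwrites the earlier one, so a real implementation would need a policy for that.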

Data automatically creeping into neighboring ES instances

By default, the Zen Discovery option is enabled in ES. Thanks to it, if you run several ES instances on the same network (for example, several docker containers on the same host), they will find each other and share the data among themselves. A very convenient way to mix production and test data and then spend a long time sorting it out.

It falls and then takes a long time to rise. It's even more painful with docker

The pile of daemons involved in our criminal scheme is quite numerous. In addition, some of them like to fall over for no clear reason and take a very long time to come back up (yes, the ones in Java). Most often the logstash-indexer hangs; in the logs there is either silence or unsuccessful attempts to send data to ES (and it is clear that they were made a long time ago, not just now). The process is alive; if you send it kill, it takes a very long time to die. You have to send kill -9 if you have no time to wait.

Less often, but it also happens, Elasticsearch falls. It does this "the English way" (that is, silently).

To understand which of the two fell, we make an HTTP request to ES: if it answers, then ES is not the one. In addition, when you have a relatively large amount of data (say, 500G), your Elasticsearch will spend about half an hour loading this data after startup, and during that time it will be unavailable. Kibana's own data is stored there too, so Kibana also does not work until its index is picked up. By the law of meanness, its turn usually comes at the very end.

You have to monitor the queue length in rabbitmq in order to respond to incidents quickly. They happen reliably once a week.

And when you have everything in docker, and the containers are linked to each other, you also have to restart all the containers that were linked to the ES container, in addition to the ES container itself.
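The diagnosis described in this section (queue backs up, then ask ES whether it is alive) can be written down as a small decision function. This is only a sketch under assumptions: `classifyIncident`, `probeEs`, the queue limit and the URL in the usage note are hypothetical, and how the queue length is obtained is left to the caller.

```javascript
const http = require("http");

// Resolve to true if anything answers over HTTP at the given URL, false otherwise.
function probeEs(url, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const req = http.get(url, (res) => {
      res.resume(); // discard the body, we only care that ES responded
      resolve(true);
    });
    req.setTimeout(timeoutMs, () => {
      req.destroy();
      resolve(false);
    });
    req.on("error", () => resolve(false));
  });
}

// Decide which component to look at, following the reasoning in the text.
// All inputs are supplied by the caller; this function does no I/O itself.
function classifyIncident({ queueLength, queueLimit, esAnswers }) {
  if (queueLength <= queueLimit) return "ok";
  // The queue is backing up, so something downstream of RabbitMQ is stuck.
  return esAnswers ? "logstash-indexer" : "elasticsearch";
}

console.log(classifyIncident({ queueLength: 50000, queueLimit: 1000, esAnswers: true }));
// → logstash-indexer
```

In use, `esAnswers` would come from something like `await probeEs("http://localhost:9200/")`, with the host and port taken from the logstash output config. Keep in mind that ES is also unreachable while it loads its data after a restart, so "elasticsearch" here can mean "still starting" as well as "down".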

Large memory dumps on OOM

By default, the HeapDumpOnOutOfMemoryError option is enabled in ES. This may cause you to run out of disk space unexpectedly because of one or more dumps of ~30GB in size. They are written, of course, to the directory where the binaries are (and not to where the data is). This happens quickly; monitoring does not even have time to send an SMS. You can disable this behavior in bin/elasticsearch.in.sh.

Performance

In Elasticsearch there is a so-called "mapping" of indices. In essence, this is a table schema in which information is stored in the "field - type" format. It is created automatically based on the incoming data. This means that ES will remember the name and data type of a field based on what type of data arrived in that field the first time.

For example, we have 2 very different logs: the nginx access log and the production log of the nodejs application. In one, the set of fields is standard and short, and the data types never change. In the other, on the contrary, there are many fields, they are nested, they differ for each line of the log, the names can overlap, the data inside the fields can be of different types, and the line length reaches 3 MB or more. As a result, ES auto-mapping does this:

Mapping of a healthy person's "rectangular" nginx log:

root@localhost:/> du -h ./nginx.mapping

16K ./nginx.mapping

Mapping of a smoker's "shapeless" JSON log of our application:

root@localhost:/> du -h ./prodlog.mapping

2.1M ./prodlog.mapping

In general, this greatly slows things down both when indexing data and when searching through Kibana. Moreover, the more data has accumulated, the worse it gets.

We tried to deal with this by closing old indexes using curator. There is certainly a positive effect, but it is still anesthesia, not a treatment.

Therefore, we came up with a more radical solution. All heavy nested JSON in the production log will now be logged as a string in a single dedicated message field. That is, directly via JSON.stringify(). Due to this, the set of fields in messages becomes fixed and short, and we arrive at a "lightweight" mapping like that of the nginx log.

Naturally, this is a form of "amputation with further prosthetics", but the option works. It will be interesting to see in the comments how else this could be done.
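A rough sketch of the stringify approach, with a helper that counts how many field paths a dynamic mapping would have to track before and after. The field list, the `payload` field name and both function names are assumptions; the article only says that nested JSON goes into one dedicated string field.

```javascript
// Keep a small fixed set of top-level fields; pack everything else into one string.
const FIXED_FIELDS = ["time", "level", "msg", "request_id"]; // hypothetical field set

function flattenForLogging(event) {
  const out = {};
  const rest = {};
  for (const [key, value] of Object.entries(event)) {
    if (FIXED_FIELDS.includes(key)) out[key] = value;
    else rest[key] = value;
  }
  out.payload = JSON.stringify(rest); // nested data becomes an opaque string for ES
  return out;
}

// List every dotted field path of a document (arrays are treated as leaves here).
function fieldPaths(value, prefix = "") {
  if (value === null || typeof value !== "object" || Array.isArray(value)) {
    return prefix ? [prefix] : [];
  }
  const paths = [];
  for (const [key, inner] of Object.entries(value)) {
    paths.push(...fieldPaths(inner, prefix ? `${prefix}.${key}` : key));
  }
  return paths;
}

const event = {
  time: "2016-04-01T12:00:00Z",
  level: 30,
  msg: "site created",
  request_id: "abc",
  site: { id: 1, owner: { name: "x", plan: "free" } },
};
console.log(fieldPaths(event).length);                    // 7
console.log(fieldPaths(flattenForLogging(event)).length); // 5
```

However deep or varied the nested part becomes, the flattened event always exposes the same five fields. The price is that the packed data can only be found by text search, not by field.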

Subtotal

The ELK stack is a cool tool. For us, it has become simply indispensable. Managers monitor the bursts of errors on the frontend after the next release and come to complain to the developers already "not empty-handed". Those, in turn, find correlations with errors in the application, immediately see their stacks and other important information necessary for debugging. It is possible to instantly build various reports from the series "hits by site domain", etc. In a word, it is not clear how we lived before. But then again...

"Robust, Reliable, Predictable": none of this is about ELK. The system is very moody and rich in unpleasant surprises. Very often you have to dig into all this shaky, pardon me, Jav-no. Personally, I can't recall a technology that follows the "set it up and forget it" principle so poorly.

Therefore, in the last 2 months we completely redid the application logs. Both in terms of format (we got rid of dots in names in order to switch to ES v.2) and in terms of the approach to what to log and what not to. In itself, this process, IMHO, is absolutely normal and logical for a project like ours: uKit recently celebrated its first birthday.

"At the beginning of the path, you dump as much information as possible into the logs, because it's not known in advance what will be needed, and then, starting to "grow up", you gradually remove the excess." (c) pavel_kudinov


Posted by: hendersonpentrong1942.blogspot.com
